My Docker and Kubernetes Learning

Heavily borrowed from Nana's tutorial at:-

https://www.youtube.com/watch?v=3c-iBn73dDE

Basic Concepts about Docker:-

Imagine Docker as a magic box for your applications. As a programmer, you know how sometimes getting your code to run in different environments can be a headache. Docker swoops in to solve that problem by packaging up your entire application, along with all its dependencies, into a neat, self-contained unit called a container.

Think of containers like shipping containers: they hold everything your application needs to run, from the code to the libraries and even the runtime environment. This means you can create your application once, package it into a Docker container, and then ship it anywhere – whether it's your colleague's computer, a testing server, or even a cloud platform.

With Docker, you can largely say goodbye to the infamous "it works on my machine" problem. Because containers carry the same dependencies into every environment, your application behaves consistently wherever it's deployed, provided the host has a compatible kernel and CPU architecture.

Plus, Docker makes it easy to manage your applications. You can spin up multiple containers, define multi-container applications on a single host with Docker Compose, and scale and orchestrate containers across a cluster of machines with Kubernetes.

In essence, Docker empowers you to focus on writing code without worrying about the nitty-gritty details of deployment and environment setup. It's like having a portable, self-contained package for your applications, making development and deployment a breeze.

Difference between containers and virtual machines

Docker containers and virtual machines (VMs) are both technologies used to run applications in isolated environments, but they have significant differences in how they achieve isolation and resource utilization:

Architecture:

Docker Container: Containers are lightweight because they leverage the host operating system's kernel. Each container shares the host OS kernel but runs in isolated user spaces. This shared kernel approach results in faster startup times and lower resource overhead.

Virtual Machine: VMs, on the other hand, emulate an entire computer system, including hardware such as CPU, memory, storage, and network interfaces. Each VM runs its own operating system, known as the guest OS, on top of a hypervisor, which manages the virtualized hardware resources. This approach creates a higher level of isolation but comes with higher resource overhead and slower startup times compared to containers.

Resource Utilization:

Docker Container: Containers consume fewer resources because they share the host OS kernel and don't require the overhead of emulating hardware.

Virtual Machine: VMs consume more resources due to the need for each VM to run its own guest OS and emulate hardware.

Isolation:

Docker Container: Containers provide process-level isolation, meaning each container runs its own processes and filesystem, but shares the same kernel with the host OS. This isolation is generally sufficient for most applications.

Virtual Machine: VMs provide stronger isolation since each VM runs its own complete operating system, including its own kernel. This makes VMs more suitable for running applications with strict security or compliance requirements.

Portability:

Docker Container: Containers are highly portable because they encapsulate the application and its dependencies into a single package. This package can run on any system with a compatible container runtime, as long as the host's kernel type (Linux or Windows) and CPU architecture (for example amd64 or arm64) match what the image was built for.

Virtual Machine: VMs are less portable because they require a hypervisor that is compatible with the guest OS. Moving VMs between different hypervisors or cloud platforms can be more challenging compared to containers.

In summary, Docker containers offer lightweight, efficient, and portable application isolation, whereas virtual machines provide stronger isolation at the cost of higher resource overhead and less portability. The choice between Docker containers and VMs depends on factors such as performance requirements, resource utilization, and isolation needs.
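You can check the shared-kernel point from the Architecture section yourself. Below is a minimal Python sketch (Unix-like systems only, since it relies on os.uname()) that prints the kernel the current process is running on. The file name kernel_info.py and the python:3.12-slim image used in the usage note are assumptions for illustration.

```python
import os


def kernel_info() -> dict:
    """Describe the kernel this process is running on.

    os.uname() is answered by the kernel itself, so inside a container it
    reports the host's kernel release rather than anything from the image.
    """
    u = os.uname()  # Unix-like systems only; not available on Windows
    return {"system": u.sysname, "release": u.release, "machine": u.machine}


if __name__ == "__main__":
    info = kernel_info()
    print(f"{info['system']} kernel {info['release']} on {info['machine']}")
```

On a Linux host, compare python3 kernel_info.py with docker run --rm -v "$PWD":/app python:3.12-slim python /app/kernel_info.py. The release string should be identical, because the container has no kernel of its own. A VM would instead report its guest kernel. With Docker Desktop on macOS or Windows, the container reports the kernel of Docker Desktop's Linux VM.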

Bonus Diagram- The kernel is the part of the operating system that talks to the hardware and handles core tasks such as process management, memory management, file system management and security.

The kernel is not the same thing as the OS. It is the part of the OS that actually communicates with the hardware. The kernel reads data from the disk and accesses memory and any other device connected to the system. It also manages running processes and allocates memory and other resources to them.

An operating system (OS) is not only a kernel. While the kernel is a crucial component of an OS, an operating system typically consists of several layers of software beyond just the kernel. These layers collectively provide the necessary functionalities to manage hardware resources, facilitate user interaction, and support software applications.

Here are some common components of a typical operating system:

Kernel: As discussed earlier, the kernel is the core component responsible for managing hardware resources, providing essential services, and enforcing security policies.

Device Drivers: These are software modules that allow the kernel to communicate with specific hardware devices, such as disk drives, network interfaces, and printers. Device drivers provide an abstraction layer, enabling the kernel to interact with various hardware devices using a unified interface.

System Libraries: Operating systems often include libraries of reusable code that provide common functions and services to applications. These libraries abstract low-level operations, such as file I/O, network communication, and memory management, making it easier for developers to write software that runs on the OS.

System Services: OSes typically offer a variety of system services to support common tasks and functionalities. These services may include networking services, printing services, time synchronization, and user authentication.

User Interface: Most operating systems provide a user interface (UI) that allows users to interact with the system and run applications. This can range from a command-line interface (CLI) to a graphical user interface (GUI), depending on the OS and its target audience.

Utilities: Operating systems come with a set of utility programs that perform various system management tasks, such as file management, process monitoring, system configuration, and troubleshooting.

Applications: While not strictly part of the operating system itself, applications (such as word processors, web browsers, and games) run on top of the OS and rely on its services and resources to function.

Collectively, these components form a complete operating system that provides an environment for running software applications, managing hardware resources, and facilitating user interaction. The kernel serves as the foundation of the OS, orchestrating the interactions between hardware and software components and providing a platform for higher-level functionalities.

Docker interacts closely with the kernel of the host operating system in several ways:

Containerization: Docker uses two Linux kernel features, namespaces and control groups (cgroups), to create isolated environments called containers. Namespaces limit what a container can see (its own process IDs, network interfaces, mount points and hostname), while cgroups limit how much CPU, memory and I/O it can use.

Resource Management: Docker utilizes the kernel's capabilities for resource management to ensure that containers have access to the appropriate amount of CPU, memory, and other system resources. This involves leveraging kernel features like cgroups to limit and prioritize resource usage among containers.

Networking: Docker leverages kernel networking capabilities to provide networking functionalities to containers. It interacts with the kernel's networking stack to create virtual network interfaces, manage network namespaces, and configure network connectivity for containers.

Filesystem Management: Docker relies on the kernel's filesystem capabilities to provide containerized filesystems. It uses union filesystems, by default OverlayFS through the overlay2 storage driver, to stack lightweight and efficient layers for container images.

In summary, Docker interacts closely with the kernel of the host operating system to implement containerization, manage resources, provide networking, and handle filesystem operations for containers.
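Namespaces and cgroups are visible from ordinary Linux processes, not only from Docker. The Python sketch below (Linux only) reads them from /proc. Every process belongs to one namespace of each type, exposed as symlinks under /proc/<pid>/ns. Two processes share a namespace exactly when the link targets match.

```python
import os
import sys
from pathlib import Path


def namespaces(pid: str = "self") -> dict[str, str]:
    """Map each namespace type (pid, net, mnt, uts, ...) to its identifier.

    Linux exposes a process's namespaces as symlinks in /proc/<pid>/ns whose
    targets look like 'net:[4026531840]'. Two processes share a namespace of
    a given type exactly when these targets are equal.
    """
    ns_dir = Path("/proc", pid, "ns")
    return {entry.name: os.readlink(entry) for entry in sorted(ns_dir.iterdir())}


def shared_namespaces(pid_a: str, pid_b: str) -> list[str]:
    """List the namespace types that two processes have in common."""
    a, b = namespaces(pid_a), namespaces(pid_b)
    return sorted(name for name in a.keys() & b.keys() if a[name] == b[name])


def cgroups(pid: str = "self") -> list[str]:
    """Return the cgroup membership lines from /proc/<pid>/cgroup."""
    return Path("/proc", pid, "cgroup").read_text().splitlines()


if __name__ == "__main__" and sys.platform.startswith("linux"):
    for kind, ident in namespaces().items():
        print(f"{kind:20} {ident}")
    print("cgroups:", cgroups())
```

To compare your shell with a container, get the container's host PID with docker inspect --format '{{.State.Pid}}' <container_name>. Then call shared_namespaces("self", "<that pid>") as root, because reading another process's /proc/<pid>/ns needs privileges. The pid, net, mnt, uts and ipc namespaces will typically differ.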

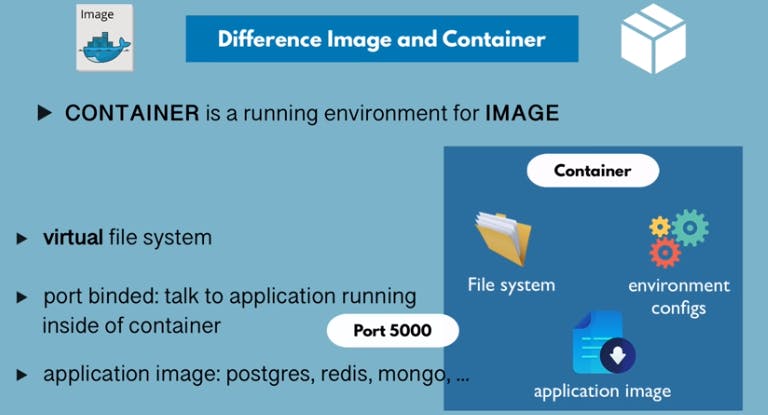

What is the difference between a container, image and pod:-

Containers, images and pods are fundamental concepts in the container world, but they come from different technologies: containers and images from Docker, pods from Kubernetes. Let me explain each:

Container: In Docker, a container is a runtime instance of an image. It encapsulates an application and its dependencies. Containers run isolated from each other and from the host system, providing consistency in different environments. They package an application's code, runtime, system tools, system libraries, and settings.

Image: An image is a lightweight, standalone software package that includes everything needed to run an application: the code, runtime, libraries, environment variables, and configuration files. Images are usually built from a Dockerfile, which contains instructions to assemble the image. Images are immutable, meaning they cannot be changed once they are built, but a single image can be used to create many containers.

Pod: In the Kubernetes world, a pod is the smallest deployable unit. It represents one or more containers that are always scheduled together on the same node. Containers within a pod share the same network namespace, including IP address and port space, and can communicate with each other using localhost. The Kubernetes scheduler decides which node each pod runs on. The kubelet on that node keeps the pod's containers running and restarts them according to the pod's restart policy, while controllers such as Deployments replace pods that fail.

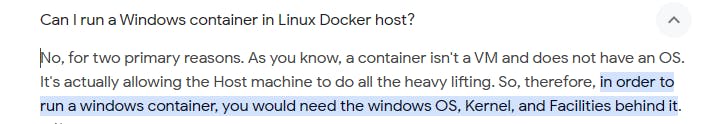
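A pod is easiest to understand from its manifest. The Python sketch below builds a minimal two-container Pod and prints it as JSON, which kubectl accepts just like YAML. The pod name, container names and image tags (nginx:1.25, busybox:1.36) are illustrative assumptions. The sidecar can reach the web container at localhost:80 because both containers share the pod's network namespace.

```python
import json


def two_container_pod(name: str = "web-with-sidecar") -> dict:
    """Build a minimal Kubernetes Pod manifest with two containers."""
    return {
        "apiVersion": "v1",
        "kind": "Pod",
        "metadata": {"name": name},
        "spec": {
            "containers": [
                {
                    "name": "web",
                    "image": "nginx:1.25",
                    "ports": [{"containerPort": 80}],
                },
                {
                    "name": "sidecar",
                    "image": "busybox:1.36",
                    # Polls the web container over the shared loopback interface.
                    "command": [
                        "sh", "-c",
                        "while true; do wget -q -O- http://localhost:80 >/dev/null"
                        " && echo ok; sleep 5; done",
                    ],
                },
            ]
        },
    }


if __name__ == "__main__":
    print(json.dumps(two_container_pod(), indent=2))
```

Usage, assuming the script is saved as pod.py: python3 pod.py > pod.json, then kubectl apply -f pod.json. Running kubectl logs web-with-sidecar -c sidecar should then show "ok" roughly every five seconds.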

A Statement: Docker on Windows supports both Windows containers and Linux containers. Aren't Windows containers supported on Docker running on a Linux host OS?

No, Windows containers are not supported on Docker running on a Linux host operating system. Docker containers are based on the host operating system's kernel, meaning that a Linux host can only run Linux containers, while a Windows host can run both Windows and Linux containers.

When Docker is installed on a Linux host, it utilizes the Linux kernel's features for containerization, such as namespaces and cgroups, to manage and isolate Linux containers. These features are specific to the Linux kernel and cannot be used to run Windows containers.

Conversely, when Docker is installed on a Windows host, it uses Windows-specific technologies to run Windows containers: Windows Server Containers (process isolation) and Hyper-V Containers (Hyper-V isolation). Linux containers on a Windows host run inside a lightweight Linux virtual machine (Docker Desktop's WSL 2 or Hyper-V backend), so they still get a Linux kernel. Docker Desktop lets you switch between Linux-container and Windows-container modes.

....... Enough of quick deep dives... Let's go step by step :D

Wait... some more probing questions...

Why is an image needed? Can't we just always go straight from a Dockerfile to a container?

The Dockerfile is the recipe for creating an image.

The image is a virtual filesystem (plus configuration).

The container is a running process on a host machine.

You don't want every host to build its own image based on the recipe. It's easier for some hosts to just download an image and work with that.

Creating an image can be very expensive. I have complicated Dockerfiles that may take hours to build, may download 50 GB of data, yet still only create a 200 MB image that I can send to different hosts.

Spinning up a container from an existing image is very cheap.

If all you had was the Dockerfile, every host would have to rebuild the image before it could start a container, which would make the entire workflow very cumbersome.

An image is not a runtime environment; instead, it's a static snapshot of a filesystem and configuration that forms the basis for creating a containerized runtime environment.

Images are typically created from Dockerfiles, which contain build instructions for assembling the filesystem and configuring the environment. These instructions define how the image should be constructed, specifying base layers, dependencies, environment variables, and other settings.

While images themselves are not executable, they serve as deployable units that can be instantiated into running containers. When you run a container from an image, Docker creates a writable layer on top of the image's filesystem, allowing the container to make changes during runtime while preserving the underlying image intact.
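The writable-layer idea maps neatly onto Python's collections.ChainMap, which looks up keys through a stack of mappings but writes only to the first one. The sketch below is a toy model, not how Docker actually stores files. Each image layer is a read-only dict mapping hypothetical paths to contents, and each container gets its own empty writable dict on top. It ignores deletions, which real overlay filesystems record as whiteout files.

```python
from collections import ChainMap
from types import MappingProxyType

# Hypothetical image layers (path -> file contents). MappingProxyType makes
# them read-only, mirroring how image layers are never modified.
BASE_LAYER = MappingProxyType({"/etc/os-release": "debian", "/app/config": "v1"})
APP_LAYER = MappingProxyType({"/app/main.py": "print('hi')", "/app/config": "v2"})
IMAGE = (APP_LAYER, BASE_LAYER)  # topmost layer first: it wins on lookup


def run_container(image_layers=IMAGE) -> ChainMap:
    """Model a container as a fresh, empty writable layer stacked on the image.

    Reads search the writable layer first, then each image layer top-down;
    writes always land in the writable layer (maps[0]).
    """
    return ChainMap({}, *image_layers)


def container_diff(container: ChainMap) -> dict:
    """Roughly what docker diff reports: the paths changed in the writable layer."""
    return dict(container.maps[0])


if __name__ == "__main__":
    c1, c2 = run_container(), run_container()
    c1["/app/config"] = "edited at runtime"  # lands only in c1's writable layer
    print(c1["/app/config"], c2["/app/config"], container_diff(c2))
```

Two containers started from the same image share the read-only layers but never see each other's writes, and the image itself stays untouched.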

Docker images are built using a layered architecture, where each instruction in the Dockerfile adds a new layer to the image. This layered approach enables efficient caching, incremental builds, and sharing of common dependencies across multiple images.
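The caching behaviour can be modelled in a few lines. This is a simplified sketch that treats every instruction as a layer, as the paragraph above does. It assumes each layer's cache key depends only on its parent layer's key and the instruction text. Real Docker builds also hash the contents of files brought in by COPY and ADD. The Dockerfile instructions below are hypothetical.

```python
import hashlib

# Hypothetical Dockerfile, one instruction per layer.
BEFORE = [
    "FROM python:3.12-slim",
    "COPY requirements.txt .",
    "RUN pip install -r requirements.txt",
    "COPY . .",
    'CMD ["python", "main.py"]',
]
# Same Dockerfile with only the third instruction changed.
AFTER = BEFORE[:2] + ["RUN pip install --no-cache-dir -r requirements.txt"] + BEFORE[3:]


def layer_keys(instructions: list[str]) -> list[str]:
    """Chain each instruction's hash onto its parent's, like a layer stack."""
    keys, parent = [], ""
    for instruction in instructions:
        parent = hashlib.sha256(f"{parent}\n{instruction}".encode()).hexdigest()
        keys.append(parent)
    return keys


def cached_layers(old: list[str], new: list[str]) -> int:
    """Count the leading layers of `new` that can be reused from `old`'s build."""
    reused = 0
    for old_key, new_key in zip(layer_keys(old), layer_keys(new)):
        if old_key != new_key:
            break  # once one layer misses, every layer above it is rebuilt
        reused += 1
    return reused


if __name__ == "__main__":
    print(f"reused {cached_layers(BEFORE, AFTER)} of {len(AFTER)} layers")
```

This is why Dockerfiles usually copy the dependency list and install dependencies before copying the rest of the source. Editing application code then only invalidates the later layers.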

In essence, an image is a packaged representation of an application's filesystem and configuration, designed to be instantiated into a containerized runtime environment.

\==== Useful docker commands:-

\========= Docker Images =========

Build an Image from a Dockerfile

docker build -t <image_name> .

Build an Image from a Dockerfile without the cache

docker build --no-cache -t <image_name> .

List local images

docker images

Delete an image

docker rmi <image_name>

\========= Docker Hub =========

Login into Docker

docker login -u <username>

Publish an image to Docker Hub

docker push <username>/<image_name>

Search Hub for an image

docker search <image_name>

Pull an image from Docker Hub

docker pull <image_name>

\========= Docker Containers =========

Create and run a container from an image, with a custom name:

docker run --name <container_name> <image_name>

Run a container and publish its port(s) to the host:

docker run -p <host_port>:<container_port> <image_name>

Run a container in the background

docker run -d <image_name>

Run a container on a specific named network instead of relying on the default Docker bridge network (create the network once beforehand with docker network create my-network):-

docker run -p 3306:3306 --net my-network --name cards-database -e MYSQL_ROOT_PASSWORD=samplePassword -d mysql:8.0

Start or stop an existing container:

docker start|stop <container_name> (or <container_id>)

Remove a stopped container:

docker rm <container_name>

Open a shell inside a running container:

docker exec -it <container_name> sh

Fetch and follow the logs of a container:

docker logs -f <container_name>

To inspect a running container:

docker inspect <container_name> (or <container_id>)

To list currently running containers:

docker ps

List all docker containers (running and stopped):

docker ps --all

View resource usage stats

docker container stats
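When you find yourself typing the same commands repeatedly, you can script them. Here is a small Python sketch that wraps a few of the commands above with subprocess. It creates my-network if it is missing, since the named-network example assumes it already exists. It then starts the MySQL container from that example and lists containers via docker ps --format '{{json .}}'. It assumes the docker CLI is on your PATH, and without the --run flag it only prints the commands.

```python
import json
import subprocess
import sys

NETWORK = "my-network"  # same names as the named-network example above


def mysql_run_args(name: str = "cards-database", network: str = NETWORK,
                   root_password: str = "samplePassword") -> list[str]:
    """Build the argv for the docker run example on a named network."""
    return [
        "docker", "run", "-p", "3306:3306",
        "--net", network,
        "--name", name,
        "-e", f"MYSQL_ROOT_PASSWORD={root_password}",
        "-d", "mysql:8.0",
    ]


def ensure_network(network: str = NETWORK) -> None:
    """Create the network unless docker network inspect already finds it."""
    found = subprocess.run(["docker", "network", "inspect", network],
                           capture_output=True).returncode == 0
    if not found:
        subprocess.run(["docker", "network", "create", network], check=True)


def parse_ps_json(output: str) -> list[dict]:
    """Parse docker ps --format '{{json .}}' output (one JSON object per line)."""
    return [json.loads(line) for line in output.splitlines() if line.strip()]


def list_containers(include_stopped: bool = False) -> list[dict]:
    """Like docker ps (or docker ps --all), but returns dicts instead of a table."""
    cmd = ["docker", "ps", "--format", "{{json .}}"]
    if include_stopped:
        cmd.append("--all")
    out = subprocess.run(cmd, capture_output=True, text=True, check=True).stdout
    return parse_ps_json(out)


if __name__ == "__main__":
    if "--run" in sys.argv:
        ensure_network()
        subprocess.run(mysql_run_args(), check=True)
        for c in list_containers(include_stopped=True):
            print(c.get("Names"), c.get("Status"))
    else:
        print("docker network create", NETWORK)
        print(" ".join(mysql_run_args()))
```

Other containers started on my-network can then reach the database at the hostname cards-database, because user-defined networks provide DNS resolution by container name.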

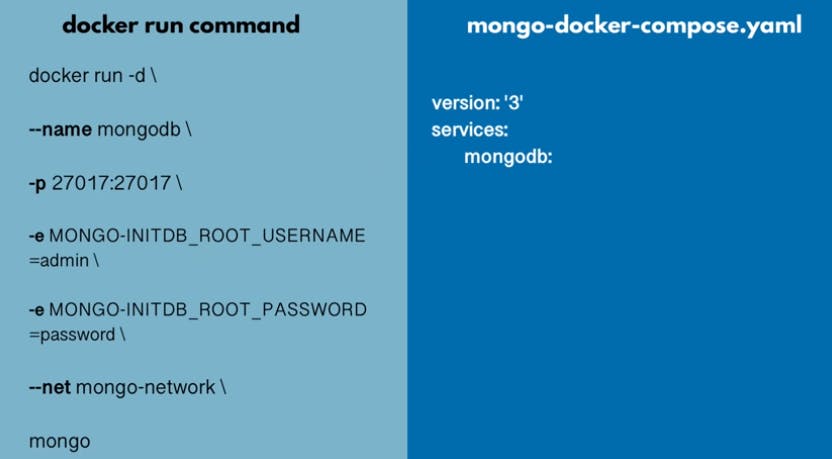

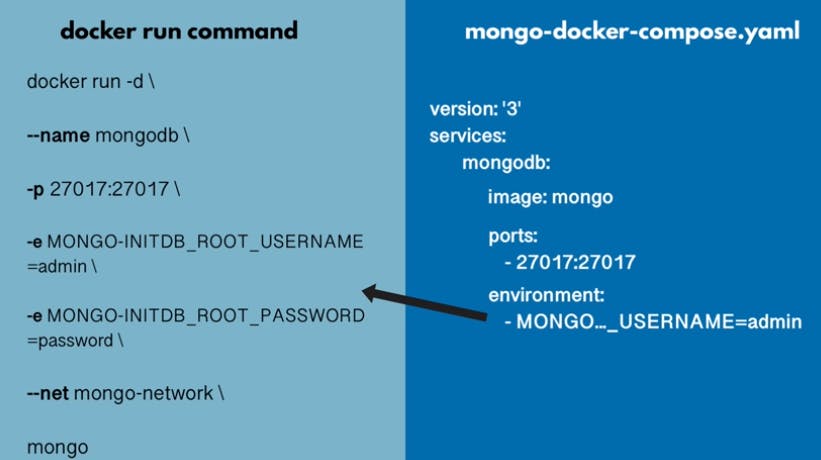

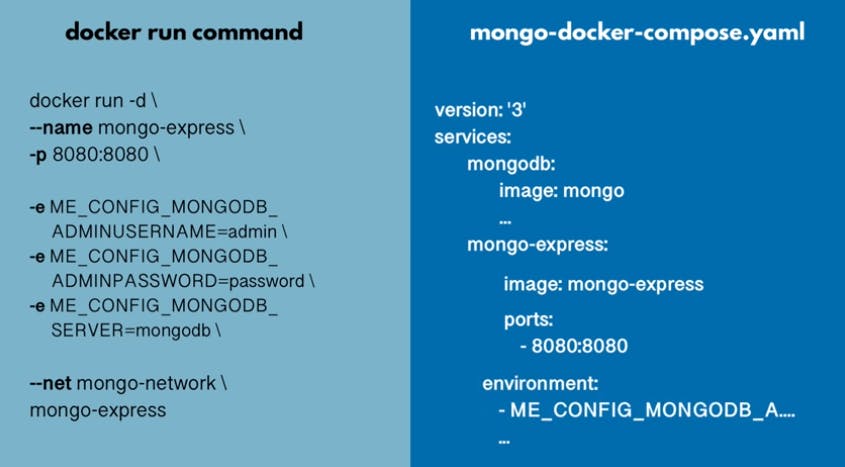

\================= Docker Compose ================

Docker Compose is a tool for defining and running multi-container Docker applications. It allows you to use a YAML file to configure the services your application depends on and then spin up all those services with a single command. Here are several reasons why Docker Compose is valuable:

Simplifies setup of multi-container applications with docker-compose.

Manages dependencies and configuration easily using YAML.

Defines services, networks, and volumes with docker-compose.yaml.

Ensures consistent behavior across environments.

Integrates seamlessly with Docker CLI commands (up, down, exec).

Facilitates streamlined development and deployment workflows.
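The dependency-management point is worth making concrete. In a compose file each service can list the services it depends_on, and docker compose up starts services in dependency order. The Python sketch below reproduces only that ordering with graphlib.TopologicalSorter (Python 3.9+). It is not Compose's implementation, and the service names are hypothetical.

```python
from graphlib import TopologicalSorter

# Hypothetical services, written as their depends_on lists from a compose file.
SERVICES = {
    "cards-database": [],
    "cards-api": ["cards-database"],
    "cards-ui": ["cards-api"],
    "adminer": ["cards-database"],
}


def startup_order(services: dict[str, list[str]]) -> list[str]:
    """Order services so each one starts after everything it depends on.

    TopologicalSorter treats each value as the predecessors of its key and
    raises graphlib.CycleError if the dependencies form a cycle.
    """
    return list(TopologicalSorter(services).static_order())


if __name__ == "__main__":
    order = startup_order(SERVICES)
    print("start:", order)
    print("stop: ", list(reversed(order)))
```

Reversing the list gives the order in which Compose stops and removes services. By default Compose only waits for a dependency to start, not to be ready to accept connections. To wait for readiness, the usual approach is a healthcheck plus a depends_on entry with condition: service_healthy.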

\==================

Understanding by example:-

docker-compose cheatsheet:-